Installing a k8s cluster (kubeadm + CRI-O + Calico)

Linux VM Images - Download VirtualBox and VMware Images

www.linuxvmimages.com

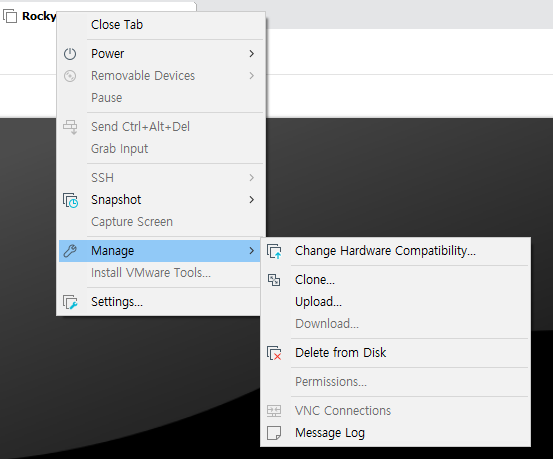

After downloading the VM, create the drive by choosing "I Copied It".

+-+-+-+-+-+-+-+-+-+-+-+-+-++-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-++-+-+-+

LINUXVMIMAGES.COM

+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

User Name: rockylinux

Password: rockylinux (sudo su -)

Last failed login: Fri May 19 04:27:00 EDT 2023 from 192.168.111.1 on ssh:notty

There was 1 failed login attempt since the last successful login.

Last login: Fri May 19 04:24:47 2023

[rockylinux@rocky8 ~]$

[rockylinux@rocky8 ~]$ sudo su -

Last login: Fri May 19 04:25:18 EDT 2023 on tty1

[root@rocky8 ~]#

[root@rocky8 ~]# systemctl stop firewalld

[root@rocky8 ~]#

[root@rocky8 ~]# systemctl disable firewalld

[root@rocky8 ~]#

[root@rocky8 ~]#

[root@rocky8 ~]#

[root@rocky8 ~]#

[root@rocky8 ~]# vi /etc/sysconfig/selinux

SELINUX=disabled

"/etc/sysconfig/selinux" 14L, 547C

[root@rocky8 ~]# nmcli con mod ens160 ipv4.address 192.168.111.201/24 \

ipv4.gateway 192.168.111.2 ipv4.dns 192.168.111.2 ipv4.method manual connection.autoconnect yes

[root@rocky8 ~]# nmcli con down ens160

Connection 'ens160' successfully deactivated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/2)

[root@rocky8 ~]# nmcli con up ens160

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/4)

[root@m1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.111.201 m1

192.168.111.202 w1

192.168.111.203 w2

192.168.111.204 w3

[root@m1 ~]# ping w1

PING w1 (192.168.111.202) 56(84) bytes of data.

64 bytes from w1 (192.168.111.202): icmp_seq=1 ttl=64 time=0.411 ms

^C

--- w1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.411/0.411/0.411/0.000 ms

[root@m1 ~]#

* A Kubernetes cluster does not use swap memory, so turn swap off on every node.

[root@m1 ~]# free -h

total used free shared buff/cache available

Mem: 1.7Gi 206Mi 1.3Gi 8.0Mi 202Mi 1.4Gi

Swap: 2.0Gi 0B 2.0Gi

[root@m1 ~]#

[root@m1 ~]# swapoff -a

[root@m1 ~]# vi /etc/fstab

# /etc/fstab

# Created by anaconda on Sun May 22 17:48:33 2022

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/rl-root / xfs defaults 0 0

UUID=ca3d8be4-42a5-4b84-9c9e-60bd45ea0173 /boot xfs defaults 0 0

/dev/mapper/rl-home /home xfs defaults 0 0

#/dev/mapper/rl-swap none swap defaults 0 0
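The manual `vi` edit above can also be scripted. The following is a minimal sketch, assuming GNU `sed` and an fstab whose swap entry has `swap` as its filesystem-type field. It works on a scratch copy (the `FSTAB` variable is illustrative), so nothing on the system changes until you point it at `/etc/fstab` as root.

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# FSTAB is a scratch copy here; set it to /etc/fstab (as root) to apply for real.
FSTAB="$(mktemp)"
cat > "$FSTAB" <<'EOF'
/dev/mapper/rl-root / xfs defaults 0 0
/dev/mapper/rl-home /home xfs defaults 0 0
/dev/mapper/rl-swap none swap defaults 0 0
EOF

# Prefix '#' to every uncommented line that has "swap" as a whitespace-separated field.
sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$FSTAB"

cat "$FSTAB"
```

Run `swapoff -a` as well, since the fstab change only takes effect at the next boot.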

[root@m1 ~]# free -h

total used free shared buff/cache available

Mem: 1.7Gi 204Mi 1.3Gi 8.0Mi 201Mi 1.4Gi

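Swap: 0B 0B 0B

Ports and Protocols

When running Kubernetes in an environment with strict network boundaries, such as an on-premises data center with physical network firewalls or virtual networks in a public cloud, Kubernetes components ...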

kubernetes.io

[root@m1 ~]# systemctl start firewalld

[root@m1 ~]# firewall-cmd --add-port={6443,2379-2380,10251,10252,10257,10259,5473,179}/tcp --permanent

success

[root@m1 ~]# firewall-cmd --add-port={4789,8285,8472}/udp --permanent

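success

Ports opened on the control plane. Port 10250/tcp (kubelet API) is missing from this list even though the kubeadm preflight warning further down names it, so open it as well.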

[root@w1 ~]# firewall-cmd --add-port={10250,30000-32767,5473,179}/tcp --permanent

success

[root@w1 ~]# firewall-cmd --add-port={4789,8285,8472}/udp --permanent

success

Ports opened on the worker nodes.

[root@m1 ~]# firewall-cmd --reload

success
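To avoid retyping the port lists on every node, the commands can be generated per role. This is only a sketch: the port numbers are copied from the transcript above, while the function names `ports_for` and `open_ports` and the `RUN` variable are made up for illustration. By default it is a dry run that prints the `firewall-cmd` calls.

```shell
# Port lists copied from the transcript above; adjust them for your CNI plugin.
ports_for() {
  case "$1" in
    control-plane) echo "6443 2379-2380 10251 10252 10257 10259 5473 179" ;;
    worker)        echo "10250 30000-32767 5473 179" ;;
    *)             echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Dry run by default: RUN defaults to "echo", so commands are printed, not executed.
# Set RUN=sudo to actually apply them.
open_ports() {
  tcp_ports="$(ports_for "$1")" || return 1
  for p in $tcp_ports; do
    ${RUN:-echo} firewall-cmd --permanent --add-port="${p}/tcp"
  done
  for p in 4789 8285 8472; do
    ${RUN:-echo} firewall-cmd --permanent --add-port="${p}/udp"
  done
  ${RUN:-echo} firewall-cmd --reload
}

open_ports worker
```

For example, `RUN=sudo open_ports control-plane` would apply the control-plane list on m1.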

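* Kernel module loading: a kernel module is a piece of code that can be attached to or detached from the kernel (loaded or unloaded) on demand, adding kernel functionality without a reboot.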

[root@m1 ~]# lsmod

Module Size Used by

nft_fib_inet 16384 1

nft_fib_ipv4 16384 1 nft_fib_inet

nft_fib_ipv6 16384 1 nft_fib_inet

nft_fib 16384 3 nft_fib_ipv6,nft_fib_ipv4,nft_fib_inet

nft_reject_inet 16384 4

-

-

-

etc.

[root@m1 ~]# modprobe overlay

[root@m1 ~]# modprobe br_netfilter

lsmod: shows the modules currently loaded in the kernel.

modprobe: explicitly loads a kernel module.
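Modules loaded with `modprobe` are gone after a reboot. On systemd-based systems such as Rocky Linux, files under `/etc/modules-load.d/` list modules to load at boot. Here is a minimal sketch; the `MODULES_DIR` variable and the file name `k8s.conf` are illustrative choices, and the default target is a scratch directory so it can be tried without root.

```shell
# Sketch: persist the two modules so they are loaded on every boot.
# Set MODULES_DIR=/etc/modules-load.d (as root) to apply it for real.
MODULES_DIR="${MODULES_DIR:-$(mktemp -d)}"

# One module name per line, as systemd-modules-load expects.
printf '%s\n' overlay br_netfilter > "$MODULES_DIR/k8s.conf"

cat "$MODULES_DIR/k8s.conf"
```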

[root@w1 ~]# tee /etc/sysctl.d/k8s.conf<<EOF

> net.bridge.bridge-nf-call-iptables = 1

> net.bridge.bridge-nf-call-ip6tables = 1

> net.ipv4.ip_forward = 1

> EOF

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

[root@w1 ~]# vi /etc/sysctl.d/k8s.conf

[root@w1 ~]#

[root@w1 ~]# sysctl --system

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-coredump.conf ...

kernel.core_pattern = |/usr/lib/systemd/systemd-coredump %P %u %g %s %t %c %h %e

kernel.core_pipe_limit = 16

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.all.promote_secondaries = 1

net.core.default_qdisc = fq_codel

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /usr/lib/sysctl.d/50-libkcapi-optmem_max.conf ...

net.core.optmem_max = 81920

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

kernel.pid_max = 4194304

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

* Applying /etc/sysctl.conf ...

[root@w1 ~]#
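After `sysctl --system`, it is worth checking that the three keys are actually live. The sketch below reads them straight from `/proc/sys`; the function name `check_k8s_sysctls` is hypothetical.

```shell
# Print the live value of each sysctl key that k8s.conf sets.
# Reads /proc/sys directly, so it needs neither root nor the sysctl binary.
check_k8s_sysctls() {
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # Dots in the key name map to slashes in the /proc/sys path.
    path="/proc/sys/$(printf '%s' "$key" | tr . /)"
    if [ -r "$path" ]; then
      echo "$key = $(cat "$path")"
    else
      # The bridge keys only exist once br_netfilter is loaded.
      echo "$key: not available"
    fi
  done
}

check_k8s_sysctls
```

On a correctly prepared node, each line should end in `= 1`.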

[root@m1 ~]# lsmod | grep br_netfilter

br_netfilter 24576 0

bridge 278528 1 br_netfilter

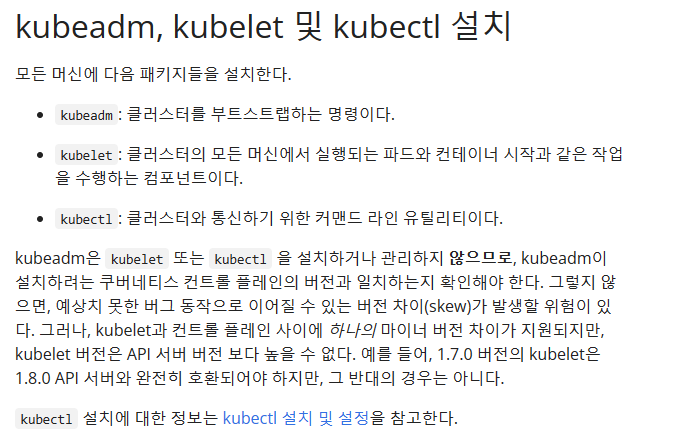

Installing kubeadm

This page shows how to install the kubeadm toolbox. For details on creating a cluster with kubeadm after completing this installation, see the Creating a cluster with kubeadm page.

kubernetes.io

[root@w2 ~]# cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF
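The `packages.cloud.google.com` repository used above was the documented source when this transcript was recorded (May 2023). The Kubernetes project has since moved its packages to `pkgs.k8s.io`, where each minor version has its own repository. Below is a sketch of the equivalent definition, assuming minor version `v1.27` to match the packages installed here. `K8S_MINOR` and `REPO_FILE` are illustrative variable names, and the file goes to a scratch path by default so it can be tried without root.

```shell
K8S_MINOR="v1.27"                     # assumption: matches the 1.27.2 packages in this transcript
REPO_FILE="${REPO_FILE:-$(mktemp)}"   # set to /etc/yum.repos.d/kubernetes.repo (as root) to apply

# Write the repo definition; ${K8S_MINOR} is expanded by the shell inside the heredoc.
cat > "$REPO_FILE" <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${K8S_MINOR}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF

cat "$REPO_FILE"
```

The install command stays the same, including `--disableexcludes=kubernetes`.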

[root@w2 ~]# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch

enabled=1

gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

~

"/etc/yum.repos.d/kubernetes.repo" 7L, 282C

[root@m1 ~]# sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Kubernetes 71 kB/s | 173 kB 00:02

Dependencies resolved.

=====================================================================================

Package Arch Version Repository Size

=====================================================================================

Installing:

kubeadm x86_64 1.27.2-0 kubernetes 11 M

kubectl x86_64 1.27.2-0 kubernetes 11 M

kubelet x86_64 1.27.2-0 kubernetes 20 M

-

-

[root@m1 ~]# sudo systemctl enable --now kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@m1 ~]# kubectl version --client

WARNING: This version information is deprecated and will be replaced with the output from kubectl version --short. Use --output=yaml|json to get the full version.

Client Version: version.Info{Major:"1", Minor:"27", GitVersion:"v1.27.2", GitCommit:"7f6f68fdabc4df88cfea2dcf9a19b2b830f1e647", GitTreeState:"clean", BuildDate:"2023-05-17T14:20:07Z", GoVersion:"go1.20.4", Compiler:"gc", Platform:"linux/amd64"}

Kustomize Version: v5.0.1

[root@m1 ~]#

[root@m1 ~]# kubectl version --short

Flag --short has been deprecated, and will be removed in the future. The --short output will become the default.

Client Version: v1.27.2

Kustomize Version: v5.0.1

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@m1 ~]# export OS=CentOS_8

[root@m1 ~]# export VERSION=1.27

[root@m1 ~]# echo $OS

CentOS_8

[root@m1 ~]# echo $VERSION

1.27

[root@m1 ~]# curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable.repo https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/devel:kubic:libcontainers:stable.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 359 100 359 0 0 354 0 0:00:01 0:00:01 --:--:-- 354

[root@m1 ~]#

[root@m1 ~]# curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable:cri-o:$VERSION.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:$VERSION/$OS/devel:kubic:libcontainers:stable:cri-o:$VERSION.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 425 100 425 0 0 459 0 --:--:-- --:--:-- --:--:-- 459

[root@m1 ~]# dnf -y install cri-o

Stable Releases of Upstream github.com/containers pa 11 kB/s | 47 kB 00:04

devel:kubic:libcontainers:stable:cri-o:1.27 (CentOS_ 54 kB/s | 215 kB 00:03

Last metadata expiration check: 0:00:02 ago on Mon 22 May 2023 03:50:21 AM EDT.

Dependencies resolved.

=====================================================================================

Package Arch Version Repo Size

=====================================================================================

Installing:

cri-o x86_64 1.27.0-1.2.el8 devel_kubic_libcontainers_stable_cri-o_1.27

35 M

-

-

Complete!

[root@m1 ~]#

[root@m1 ~]# systemctl daemon-reload

[root@m1 ~]# systemctl enable --now crio

Created symlink /etc/systemd/system/cri-o.service → /usr/lib/systemd/system/crio.service.

Created symlink /etc/systemd/system/multi-user.target.wants/crio.service → /usr/lib/systemd/system/crio.service.

[root@m1 ~]# systemctl enable --now kubelet

From here on, the steps run on the control plane (master node) only.

[root@m1 ~]# kubeadm config images pull

W0522 04:07:14.748533 14272 images.go:80] could not find officially supported version of etcd for Kubernetes v1.27.2, falling back to the nearest etcd version (3.5.7-0)

[config/images] Pulled registry.k8s.io/kube-apiserver:v1.27.2

[config/images] Pulled registry.k8s.io/kube-controller-manager:v1.27.2

[config/images] Pulled registry.k8s.io/kube-scheduler:v1.27.2

[config/images] Pulled registry.k8s.io/kube-proxy:v1.27.2

[config/images] Pulled registry.k8s.io/pause:3.9

[config/images] Pulled registry.k8s.io/etcd:3.5.7-0

[config/images] Pulled registry.k8s.io/coredns/coredns:v1.10.1

[root@m1 ~]# kubeadm init

[init] Using Kubernetes version: v1.27.2

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

-

-

-

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.111.201:6443 --token 3mir9f.2452rl4nfcp0nt2w \

--discovery-token-ca-cert-hash sha256:ab67a47a09eba7ba044ac43033dd033e117d5aa8079ee754cf24656e3595251b

[root@m1 ~]# mkdir -p $HOME/.kube

[root@m1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@m1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@m1 ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.111.201:6443

CoreDNS is running at https://192.168.111.201:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

[root@m1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

m1 Ready control-plane 5m2s v1.27.2

Install Calico networking and network policy for on-premises deployments | Calico Documentation

docs.tigera.io

[root@m1 ~]# curl https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 232k 100 232k 0 0 976k 0 --:--:-- --:--:-- --:--:-- 976k

[root@m1 ~]#

[root@m1 ~]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

[root@m1 ~]# kubectl get pods

No resources found in default namespace.

[root@m1 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-674fff74c8-7vqfc 1/1 Running 0 42s

kube-system calico-node-gb5jp 0/1 Init:2/3 0 42s

kube-system coredns-5d78c9869d-jdp76 1/1 Running 0 11m

kube-system coredns-5d78c9869d-tkgb7 1/1 Running 0 11m

kube-system etcd-m1 1/1 Running 0 11m

kube-system kube-apiserver-m1 1/1 Running 0 11m

kube-system kube-controller-manager-m1 1/1 Running 0 11m

kube-system kube-proxy-x4q57 1/1 Running 0 11m

kube-system kube-scheduler-m1 1/1 Running 0 11m

[root@m1 ~]#

Run the join command on every worker node.

[root@w1 ~]# kubeadm join 192.168.111.201:6443 --token 3mir9f.2452rl4nfcp0nt2w --discovery-token-ca-cert-hash sha256:ab67a47a09eba7ba044ac43033dd033e117d5aa8079ee754cf24656e3595251b

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
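Since the same join command has to run on w1, w2, and w3, a small loop can help. The sketch below is a dry run that only prints what it would execute. It assumes root SSH access from m1 to the workers, which the transcript does not show, and the names `JOIN_CMD` and `join_workers` are illustrative. The `<token>` and `<hash>` placeholders are deliberately left unfilled.

```shell
# On the control plane, a fresh join command can be generated with:
#   JOIN_CMD="$(kubeadm token create --print-join-command)"
# The default below is a placeholder, not a working token or hash.
JOIN_CMD="${JOIN_CMD:-kubeadm join 192.168.111.201:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>}"

# Dry run: print the ssh command for each worker instead of executing it.
join_workers() {
  for node in "$@"; do
    echo ssh "root@${node}" "$JOIN_CMD"
  done
}

join_workers w1 w2 w3
```

Generating the command with `kubeadm token create --print-join-command` is also the way out when the token printed by `kubeadm init` has expired.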

[root@w1 ~]#

[root@m1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

m1 Ready control-plane 15m v1.27.2

w1 Ready <none> 62s v1.27.2

w2 Ready <none> 62s v1.27.2

w3 Ready <none> 62s v1.27.2
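Right after a join, nodes often show `NotReady` until the Calico pods start on them. The sketch below filters `kubectl get nodes` output for nodes that are not yet `Ready`. The function name `not_ready_nodes` is hypothetical, and the sample input is the output shown above, so the block runs without a cluster.

```shell
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input taken from the transcript; prints nothing because every node is Ready.
not_ready_nodes <<'EOF'
NAME STATUS ROLES AGE VERSION
m1 Ready control-plane 15m v1.27.2
w1 Ready <none> 62s v1.27.2
w2 Ready <none> 62s v1.27.2
w3 Ready <none> 62s v1.27.2
EOF
```

Against a live cluster the call would be `kubectl get nodes | not_ready_nodes`.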